You May Also Be Interested In

Seedance2 AI

- Turn Ideas into Cinematic Videos with One Click!

Video Generators

Paid

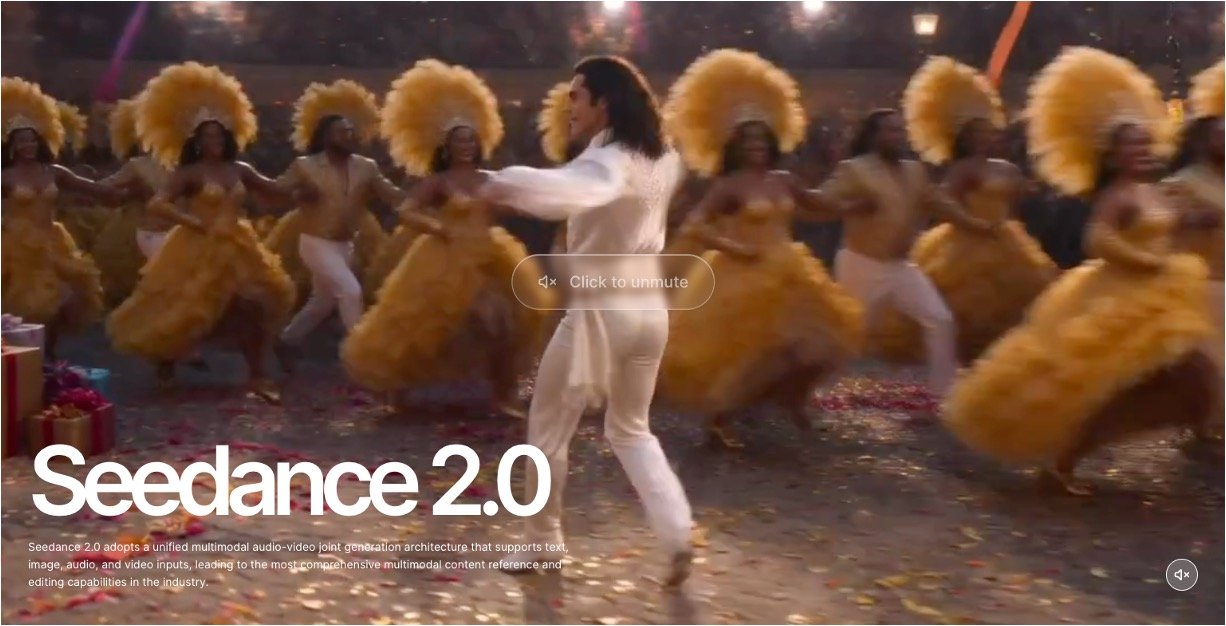

Seedance 2.0

- Multi-Modal AI Video Generator by ByteDance

Video Generators, Art Generator, Video Editing

Free Trial

Luma Labs Dream Machine

- Text a dream, watch it move like movie magic in minutes!

Video Generators

Freemium

Motion Control AI

- Animate Characters from Any Video

Video Generators, Text to Video

Free Trial, Paid

Genie 3

- Explore AI-powered realities in real time!

Video Generators

Freemium

Genmo AI

- Turn your words into stunning videos.

Video Generators

Free

Morph Studio AI Motion Control

- Direct AI videos with simple image + motion references.

Video Generators

Freemium

Joyfun AI

- Swap Faces, Animate Dreams.

Video Generators

Paid

Vheer Video AI Advice

- Transform your video ideas into reality with simple AI-powered guidance.

Video Generators

Paid, Free Trial

ZenCreator

- Create pro-level photos and videos in minutes.

Video Generators

Paid

SkyReelsAI

- Hollywood magic from your photos in minutes!

Video Generators

Paid

LitVideo

- Create Pro Videos in Seconds!

Video Generators

Paid